Matt Paterson, Machine Learning Engineer

January 14, 2022

Background

In a blogpost from last summer, “Creating Simplicity in the Workplace with NLP”, I talk about using Natural Language Processing (NLP) to route or dispatch incoming emails to their appropriate recipients. We decided that an interactive demonstration of our SmartDispatch system would be more useful to our customers than an essay alone. This paper will walk you through how I went about building:

- A dataset to use for NLP Machine Learning Model Training

- A fine-tuned BERT model from the HuggingFace Library

- A Deep Learning Neural Network for model training

- A PyTorch Model Artifact to use for topic predictions

- A PyTorch Model Artifact to use for sentiment analysis

- A RESTful API built in FastAPI to deploy the models

- A front-end interface to display this functionality built in Streamlit

- A plan for the model to improve over time after deployment

The Failed Dataset

I first wanted to find a good sampling of business emails in order to train the model. Since our clients will be mostly from small and medium sized businesses, where emails are likely to not be tagged for relationships with internal teams or departments, I wasn’t necessarily looking for a labelled dataset.

What are labels?

By “labelled” dataset, I’m referring to a set of emails or text messages that are each labelled with their topic or business department or function. With these two data points for each observation, the message and the label, I can train a model to know what marketing emails look like versus what an IT Helpdesk email looks like. A functioning model would then be able to predict the email or text message’s topic from a list of several options.

But those Emails…

In a cursory search, I quickly found the Enron Email dataset via the Kaggle website. This set of over a half million unlabelled emails became the foundation of our research. However, this proved problematic.

A big problem was that the emails in this corpus were predominantly interpersonal communications between colleagues. Since our end-goal is to create an email interpreter that will surmise the topic, tone or sentiment, and possibly the level of importance of an email as it enters the general inbound queue for a customer service team, we needed to be able to find clear differences in the text that could be coded.

But it’s a computer?

There’s a saying in ML that if a human can do it, then a computer can do it too. While the reverse doesn’t hold in every case, if a human cannot draw a line between two written messages, then our current NLP capabilities in 2022 cannot do so either (i.e., if I can’t understand your text message, then Artificial Intelligence can’t either). Thus, rather than clear signals to indicate meaning, these interpersonal emails only added noise to our model.

Another big challenge with using this unlabelled dataset was our strategy for labelling it. Obviously, I couldn’t read 500K emails and label each as one of 5 categories by hand; that would take an inordinate amount of time. We thought about bringing on interns from the local university, but we decided that simply reading and labelling emails wasn’t a good use of anyone’s time, student or professional.

The LDA Model

There exist a number of unsupervised learning methods to deal with such a challenge. The NLP solution that is the most popular for this right now is called LDA, which stands for Latent Dirichlet Allocation. LDA is effective at creating clusters within a corpus of text by selecting similarities in the vectorized text, and then grouping text together into like categories.

A Cluster What-Now?

Clustering is a process by which the computer looks at similarities between data observations, in this case emails or text messages, and groups masses of them together in like-clusters. We do a similar thing in bookstores when we put the gardening books in one section and the sci-fi in another, and the poetry in yet another part of the bookstore. You can also think of how kids group up on a playground, or how in a city of a hundred thousand people you’ll find that the different bars each attract their own particular type of person. It’s like that.

Ready-made microservices

AWS has an LDA model at the ready in their microservice called Amazon Comprehend. While I later coded up my own LDA in order to have more autonomy over the fine-tuning of the model, I started off by asking the Comprehend application to label our 500K emails first into 3 subjects and then into 5. I actually repeated this step a few times as each individual pass of the clustering model can result in slightly different groupings of the text.

The Data Scientist can choose the number of clusters, but to actually interpret and label them, it often takes a solid and focused human eye to read tens of emails in order to interpret how the model had clustered the texts. In one trial I clearly discerned a “Regulatory” category, a “Sales” category, a “Meetings/Travel” category, and a separate “Legal” category, leaving all else labelled “Interpersonal”. As sure as I thought I was here, there is no guarantee that your first or second or twelfth attempt will result in a reliably labelled dataset.

Death by Confirmation Bias

Often in our trials, when it appeared that we had succeeded in labelling the emails with this approach, we would find after a day’s rest that our best clusters were a result of chance and confirmation bias in the sub-sample that I had reviewed, and that the conclusions I had drawn didn’t necessarily map to the whole cluster.

To put it another way, I had seen the face of Keanu Reeves on the side of a building in San Francisco and thought that I’d discovered the Matrix (the movie not the nightclub). There were no clear labels for these clusters, I was only willing them to appear in front of me. Just as our eyes and our minds can play tricks on us (there is no spoon), our efforts to be good scientists can rival our human need to be successful creators (if my mixed metaphors were too much, there were no clear labels for the clusters in my trials after all).

Alternative Dataset

Given this realization, the second phase of our process involved shifting gears. This is where I found our winning dataset: I used the Python Requests library to build a labelled dataset through an API that serves social media posts.

Leaning on Past Experience

One of my prior projects in NLP involved using a free public API to pull posts and comments from social media sites in order to build a labelled dataset. Since I had this experience already, I went ahead and built a program that would hit the API and pull down about 80K posts and comments from 16 different labelled threads. I then labelled each with one of five topics, depending on which thread the message came from: “MachineLearning”, “FrontEnd”, “BackEnd”, “Marketing”, or “Finance”.

Good Data In -> Good Predictions Out

This proved to make a much more accurate predictor in the end. Essentially, we want to use natural, conversational language to train a model on the sequences of words that tend to be members of each of the aforementioned classes. For example, the goal is to train it to “know” the difference between the following:

- { ‘label’ : ‘MachineLearning’, ‘Message’ : ‘We’re building a model in PyTorch to predict the topic of emails’ }

VERSUS

- { ‘label’ : ‘Marketing’, ‘Message’ : ‘We’re building our emails with drawings modeled on the Olympic Torch’ }

Both of these messages contain the keywords “We’re”, “building”, “model”, “emails”, and “Torch”, yet each is clearly part of a different silo of the business.

To achieve our goal, we didn’t necessarily need to start with actual emails as our dataset. Instead, I could build a labelled dataset of key topics using an already-labelled source of natural, conversational language. Since the social media threads were already labelled with words such as “reactjs” or “javascript” or “webdev”, I could then put several of these together, in this case given the label of “FrontEnd”.
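The thread-to-topic labelling described above can be sketched in a few lines. Only the thread names “reactjs”, “javascript”, and “webdev” come from the text; the other entries, the `label_posts` helper, and the shape of the `posts` records are hypothetical stand-ins for whatever the real scraper returned.

```python
# Map each source thread to one of the five topic labels.
THREAD_TO_TOPIC = {
    "reactjs": "FrontEnd",
    "javascript": "FrontEnd",
    "webdev": "FrontEnd",
    "machinelearning": "MachineLearning",  # hypothetical thread name
    "personalfinance": "Finance",          # hypothetical thread name
}


def label_posts(posts):
    """Attach a topic label to each post based on the thread it came from.

    Posts from threads that are not in the mapping are skipped.
    """
    labelled = []
    for post in posts:
        topic = THREAD_TO_TOPIC.get(post["thread"].lower())
        if topic is not None:
            labelled.append({"label": topic, "Message": post["text"]})
    return labelled


# Invented example records in an assumed {"thread", "text"} shape.
posts = [
    {"thread": "reactjs", "text": "How do I lift state up?"},
    {"thread": "cooking", "text": "Best pasta recipe?"},
]
labelled = label_posts(posts)
print(labelled)
```

Because the label comes from the thread rather than from reading each message, nobody has to label anything by hand.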

The NLP Transformer Model using Deep Learning

Finally, I took the 80K labelled entries, known as “documents” in the parlance of NLP, and used them to fine-tune a pre-trained Transformer model known as BERT. I did this thanks to the HuggingFace Library of models and algorithms (https://huggingface.co/models).

While our model is 80% accurate with 80% average precision at predicting each of the 5 topics, I could greatly improve the accuracy of this model by scraping more data, evening out the sample imbalance, and adjusting other hyperparameters in our training job.
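For readers who want to see how numbers like these are computed, here is a small pure-Python sketch. It assumes that “average precision” means per-class precision averaged across the classes (macro averaging); the source doesn't say which averaging was used, and the labels below are invented.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_precision(y_true, y_pred):
    """Average over classes of: correct predictions of c / all predictions of c."""
    classes = sorted(set(y_true) | set(y_pred))
    per_class = []
    for c in classes:
        true_for_predicted_c = [t for t, p in zip(y_true, y_pred) if p == c]
        if true_for_predicted_c:
            hits = sum(t == c for t in true_for_predicted_c)
            per_class.append(hits / len(true_for_predicted_c))
        else:
            per_class.append(0.0)  # class was never predicted
    return sum(per_class) / len(per_class)


# Invented labels for illustration only.
y_true = ["FrontEnd", "FrontEnd", "Finance", "Marketing", "Marketing"]
y_pred = ["FrontEnd", "Finance", "Finance", "Marketing", "Marketing"]

acc = accuracy(y_true, y_pred)
prec = macro_precision(y_true, y_pred)
print(f"accuracy={acc:.2f} macro precision={prec:.2f}")
```

Macro averaging weights every class equally, so a rare class that the model handles badly pulls the score down, which is one reason sample imbalance matters.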

We built our 5-class Classification Model by utilizing a process in Machine Learning known as Transfer Learning to fine-tune a pre-trained model. In our case, we utilized BERT, a model that Google pre-trained on over 3 Billion words from a couple of sources including Wikipedia to learn word embeddings and relationships through Bi-Directional Encoding using Transformers. (BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, 2019, Devlin, Chang, Lee, Toutanova, https://arxiv.org/pdf/1810.04805v2.pdf)

With the help of the HuggingFace Transformers Library, we built out a custom library in PyTorch that I named Hermes after the Messenger of the Gods. As mentioned above, we began work with an LDA model in order to create labels for data that was previously unlabelled, and tried to apply this newly-labelled dataset first. This proved problematic for Hermes as the results were never better than a 42% accuracy, which is barely above a baseline model or null model. To clarify, in data science a baseline model, or null model, makes the same naive prediction every time: the simple average (mean) of the target variable for a regression, or the most common class for a classifier.

Put more simply, if I have 3 topics, “A”, “B”, and “C”, each equally common, but my model always predicts that everything I put in will be part of topic “B”, then my model will be correct 33% of the time. If I have 5 equally common topics and predict the same one each time, then my model will have 20% accuracy.
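The baseline arithmetic is easy to check in code. This sketch assumes the baseline for a classifier is “always predict the most common class”; the label lists are invented, and the last one shows how an imbalanced dataset raises the bar that a real model has to beat.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy of a model that always predicts the most common label."""
    counts = Counter(labels)
    most_common_count = counts.most_common(1)[0][1]
    return most_common_count / len(labels)


three_balanced = ["A", "B", "C"] * 10
five_balanced = ["A", "B", "C", "D", "E"] * 10
imbalanced = ["A"] * 6 + ["B"] * 2 + ["C"] * 2

print(majority_baseline_accuracy(three_balanced))  # one third
print(majority_baseline_accuracy(five_balanced))   # one fifth
print(majority_baseline_accuracy(imbalanced))      # six tenths
```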

Since the first dataset, the Enron Emails, did not offer enough differences in their embeddings to fine-tune a pre-trained model with any reliable accuracy, we turned to our alternative dataset, the social media posts and comments described above.

Using my HermesDataset object, a custom-built PyTorch Dataset (if you’re geeky, this inherits from torch.utils.data.Dataset), and the HermesPredictor library, I was able to create a custom PyTorch model artifact that predicted with reliable accuracy.
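The internals of HermesDataset aren't shown here, so the following is only a sketch of what a custom PyTorch Dataset for this kind of task might look like. `TopicDataset` and `toy_encode` are hypothetical names, and the whitespace tokenizer is a stand-in for a real BERT tokenizer so that the example runs without downloading anything.

```python
import torch
from torch.utils.data import DataLoader, Dataset

LABELS = ["MachineLearning", "FrontEnd", "BackEnd", "Marketing", "Finance"]


class TopicDataset(Dataset):
    """Hypothetical stand-in for a dataset like HermesDataset."""

    def __init__(self, texts, labels, encode, max_len=8):
        self.texts = texts
        self.labels = [LABELS.index(name) for name in labels]
        self.encode = encode  # callable: str -> list of int token ids
        self.max_len = max_len

    def __len__(self):
        return len(self.texts)

    def __getitem__(self, idx):
        # Truncate to max_len, then pad with zeros and mask out the padding.
        ids = self.encode(self.texts[idx])[: self.max_len]
        pad = self.max_len - len(ids)
        return {
            "input_ids": torch.tensor(ids + [0] * pad),
            "attention_mask": torch.tensor([1] * len(ids) + [0] * pad),
            "label": torch.tensor(self.labels[idx]),
        }


vocab = {}


def toy_encode(text):
    # Toy tokenizer: give each new lowercase word the next id (0 is padding).
    return [vocab.setdefault(word, len(vocab) + 1) for word in text.lower().split()]


dataset = TopicDataset(
    ["We're building a model in PyTorch", "Our emails use Olympic Torch drawings"],
    ["MachineLearning", "Marketing"],
    encode=toy_encode,
)
batch = next(iter(DataLoader(dataset, batch_size=2)))
print(batch["input_ids"].shape, batch["label"])
```

The DataLoader stacks the per-item dictionaries into batched tensors, which is the general shape of input a BERT-style model consumes.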

There are many like it but this one is mine

My model, called HermesModel_80.pt, can ingest a vectorized message and predict that message’s topic from the 5 options with 80% accuracy and 80% average precision. I know, it’s pretty cool, right? After training the model on a GPU instance through a Deep-Learning optimized Amazon SageMaker Notebook Instance (p3.xlarge), I saved my model artifact to an S3 bucket so that I could access it later on a much smaller instance kernel and use it for inference.
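Saving on a GPU machine and loading later on a smaller CPU machine can be sketched as follows. This assumes the artifact holds a PyTorch state dict (the source doesn't say whether the whole model or only its weights were saved), uses a tiny linear layer as a stand-in for the real classifier, and leaves out the S3 upload and download entirely.

```python
import os
import tempfile

import torch
from torch import nn

# Stand-in for the trained classifier: 768 input features, 5 topic classes.
model = nn.Linear(768, 5)

# Save only the learned weights (the state dict) to a .pt file.
path = os.path.join(tempfile.mkdtemp(), "toy_model.pt")
torch.save(model.state_dict(), path)

# Later, on the inference machine: rebuild the architecture, then load the
# weights. map_location="cpu" lets weights saved on a GPU load without one.
restored = nn.Linear(768, 5)
state = torch.load(path, map_location="cpu")
restored.load_state_dict(state)
restored.eval()  # switch off training-only behaviour such as dropout

with torch.no_grad():
    logits = restored(torch.zeros(1, 768))
predicted_class = int(logits.argmax(dim=1))
print(predicted_class)
```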

While a fully-functioning and deployed model inside of a customer’s email queue could be trained to well above 90% accuracy using a larger training set, more hyperparameter tuning, and better control for pre-training sample imbalance, I’m very happy with the resulting application. I also used a sentiment analysis dataset that I have used in prior scholastic work, and I ran that dataset through the HermesPredictor library in the same manner to create a 90% accurate sentiment analysis model called HermesSentiment_90.pt.

Amazon Built-in Options

Amazon has recently created its own wrapper to fine-tune pre-trained Transformer models using the HuggingFace Transformers and other libraries. You can now use the SageMaker SDK, or the Amazon SageMaker console, to fine-tune a pre-trained BERT model from the HuggingFace library on your dataset.

Unfortunately for me, in the short time I had to work on this project I did not find a good resource for building a data pipeline from raw text to a PyTorch Dataset object that could be fed into the DataLoader in a format readable by the BERT model. Thus, I chose to use native PyTorch outside of the SageMaker SDK this time, though I still called on Amazon’s SageMaker Notebook instances for the CUDA GPUs that PyTorch uses for training.

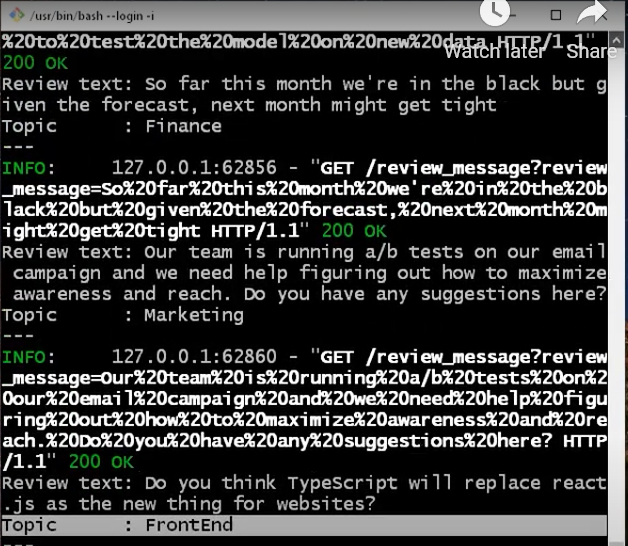

The API

In order for us to put this model into production, it was necessary to create a RESTful API that could serve our model. According to Wikipedia, “The REST architectural style emphasizes the scalability of interactions between components, … to facilitate caching components to reduce user-perceived latency, enforce security, and encapsulate legacy systems”. Thus it allows us to run inference on our model in the cloud, which frees up processing time and reduces latency on the client side.

Jumpin Jack FastAPI

For this purpose I chose to use FastAPI as it is a 100% Python solution. A lot of the documentation around RESTful APIs on the internet is written with node.js or JavaScript programmers in mind, and for good reason: JavaScript is the ubiquitous language of our modern internet. However, as a Machine Learning Engineer who knows very little about utilizing the Document Object Model (that’s what our Front-End Devs are good at!), I appreciate a framework that allows me to build the API in a very short time so that my model can be utilized faster in a production capacity. Think of a much faster time to POC, and you can bring in the paid web devs if the model is promoted to MVP.

Why So Fast?

FastAPI is also fairly lightweight and can run locally or in the cloud, serving inference from both models with minimal latency. We could increase the response speed of our downstream application by creating separate APIs for each model, possibly even creating a third API to handle the text encoding, and then running them from separate servers or separate virtual machines in Kubernetes or Docker containers. Our goal for this exercise, however, is to create a demonstration application, so for now a two or three second lag time requires no further re-tooling.

Also, the majority of our use-cases for this tech will occur under the hood of one of our customers’ enterprise email systems. By putting our model into a FastAPI architecture, though, we could repurpose the model to work in chatbots, document review systems, or even internal help queues that review documentation for easy solutions.

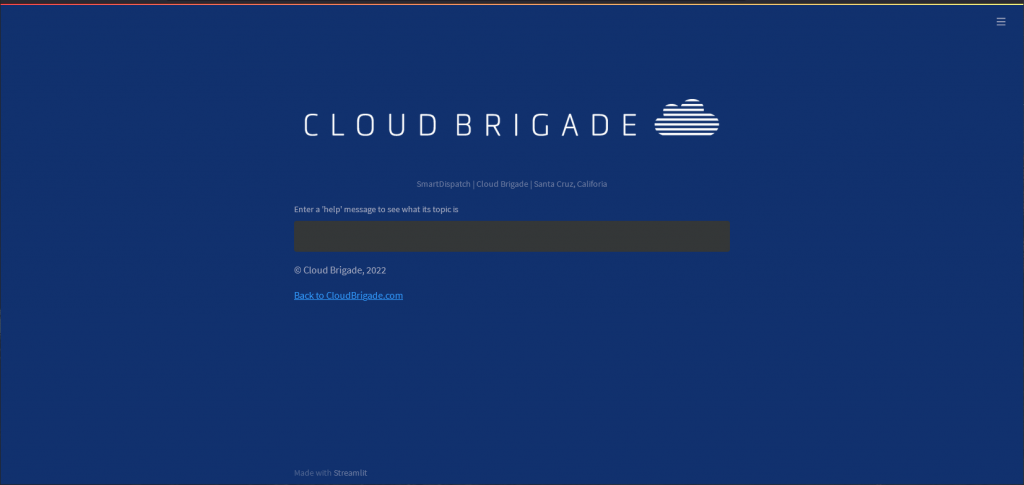

The GUI Application

Finally, to give us humans a way to work with our API, I created a front-end application, or what is called a Graphical User Interface (GUI), using the Streamlit library in Python. Streamlit employs some pretty easy pre-made HTML and JavaScript to produce a front-end application that I can write entirely in Python. As the developer, I only need to know Python to use Streamlit.

As a Machine Learning Engineer, this allows me to iterate quickly, to develop my application on my own machine without the need for extra overhead, and it lets me produce this application without taxing the design or development team. Once a client asks us to build them a custom solution, the front-end team can then go to work to marry my API and Data Science Model to the customer’s color schemes, look-and-feel, and embed that naturally into existing software for their users if they want a customer-facing interface.

My Streamlit application can also be served from the same server or virtual machine that I serve the API from. However, doing so can add latency, since the server then has to run the code for the front-end app, the API, and inference against the endpoint sequentially, one thing at a time.

A lifetime-learner

In our current era, we expect all new AI to be more sentient than it actually is. As such, we expect that any new inference device like SmartDispatch will improve over time. We see this in our smartphones as the voice assistant application will start to recognize our accent or dialect and make better recommendations the more we talk to it.

To add this functionality to the SmartDispatch App, there are several strategies that we can employ. One would be adding each incoming message as a new labelled data point and fine-tuning our model periodically over time. For this we would also use a feedback loop from human users who review the emails (known as “Human-In-The-Loop”). A second strategy would include pulling more labelled social media posts over time to pick up conversations about new technology or evolving nomenclature in each of the topic classes.

The final strategy would be a combination of these steps in what is called an ensemble model. We would create an algorithm that couples this ensemble with a heuristic that measures the accuracy, recall, and precision metrics of the subsequent models and chooses the champion model to automatically deploy back into the app. Of course, just as human beings need an annual checkup by a physician, a production model requires regular model maintenance by a Data Scientist to detect and handle model drift, and Cloud Brigade can be there for this regular checkup.
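The champion-selection heuristic could be as simple as a weighted score over the three metrics. This is a sketch of one possible rule, not a description of a deployed system: the `choose_champion` function, the equal default weights, and every metric value below are invented for illustration.

```python
def choose_champion(candidates, weights=None):
    """Return the name of the candidate with the highest weighted metric score."""
    weights = weights or {"accuracy": 1.0, "precision": 1.0, "recall": 1.0}

    def score(metrics):
        return sum(weights[name] * metrics[name] for name in weights)

    return max(candidates, key=lambda name: score(candidates[name]))


# Invented evaluation results for three hypothetical model versions.
candidates = {
    "current": {"accuracy": 0.80, "precision": 0.80, "recall": 0.78},
    "retrained_with_feedback": {"accuracy": 0.84, "precision": 0.83, "recall": 0.82},
    "retrained_with_new_posts": {"accuracy": 0.82, "precision": 0.85, "recall": 0.79},
}

champion = choose_champion(candidates)
precision_first = choose_champion(candidates, weights={"precision": 1.0})
print(champion, precision_first)
```

Changing the weights changes the winner, so the heuristic itself is a business decision: a team that cares most about avoiding misrouted email might weight precision more heavily.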

Conclusion

In this paper I’ve walked you through my process to

- Create and label a sample dataset

- Train and save a deployable Machine Learning Model artifact in PyTorch

- Build and deploy a RESTful API in FastAPI to serve the model

- Build and deploy a Front-end application to showcase this to our customers

Whether you’re a little nerdish, or a full-silicon-jacket nerd like me, I hope that you’ve enjoyed this read and that you’ll consider the expertise that Cloud Brigade can lend to help your business use AI to modernize and stay ahead of the competition.

Visit http://smartdispatch.cloudbrigade.com:8501/ to see and use the demo app!
