It was November 2016, and I was listening to public radio on my way to work. Our president-elect was already known for his use of Twitter, and reporters were discussing their challenges, grappling with what they should or should not report on. At that time there was a growing feeling that those tweets often distracted from more significant news.

Fake news was discussed daily, and I had been thinking about this problem for a few months. On a whim I thought, what if we created a website that allowed the public to rate our president’s tweets as true or false? It would be simple to build and integrate with Twitter, and maybe it would provide a benefit to the public. Then I thought I’d probably receive a cease and desist in about 5 nanoseconds, so I tabled the idea.

The following Monday, during my 40-minute commute, I came up with a better idea. What if we provided a platform that used “crowdsourcing” to rate news articles? The existing systems, primarily websites like Snopes, simply could not scale in response to the amount of “news” coming from thousands of websites.

You Debunk It was born

Of course there were many considerations that would determine whether this would actually work. First, would the general public be willing to invest their time in rating news articles? How would we keep this tool unbiased and non-partisan, and prevent it from being gamed by individuals or bots? Within an hour I had drafted a project specification and taken it to our software development team to discuss.

We looked at several existing “design patterns” which were used successfully on other websites with an engaged community. As we roughed out our user interaction model, we combined these patterns to provide a system of checks and balances that would ensure there was no single source of truth in the rating system, and to minimize bias as articles were rated.

Finding Inspiration

We believed that a portion of our public, say 5%, was invested enough in the pursuit of truth to spend time rating articles. This type of engagement was evident in communities such as Reddit, Quora, Stack Overflow, Slashdot, and others. These sites provided the ability for questions to be answered, discussions to ensue, up/down voting, karma scoring, and flagging for abuse or spam.

We set out and built our web-based application with:

- News article submission system

- Multi-factor signup and verification system

- Searchable list of articles

- Article ratings with threaded comment sections

Will They Use It?

With the proof of concept built and tested, we realized we faced a number of problems with adoption. Without enough articles, it would be difficult to ramp up adoption. The bigger problem was getting people to remember to use our website when reading news. We needed a way to remain relevant daily.

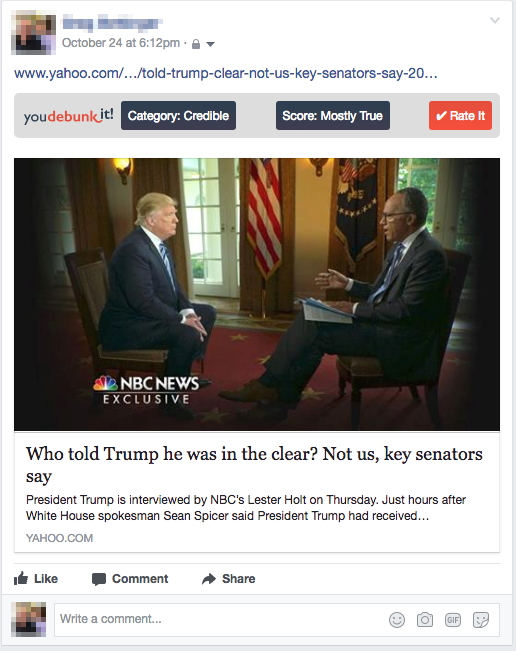

We considered that most news consumption was happening within social media sites such as Facebook and Twitter. If we wanted to keep the public engaged, we needed to be part of the social media sites. We assumed Facebook was not going to be open to this idea, so we went rogue and built a browser plugin.

Hacking Facebook

The problems with this solution were that the list could be biased, and it only rated domain names. In reality, any website was capable of publishing inaccurate news, and we needed to be granular to the article level. A plugin that could hijack Facebook’s news feed and overlay content was the feature we were after.

So we set out to build our own browser plugin, which looked at the type of content posted in the Facebook feed and overlaid our own toolbar with a category and truth score (if available), plus a button a user could click to rate the article. This would drive user engagement, just what we needed. If Facebook sent us a cease and desist, so be it. We provided an optional, user-installed plugin, and I suspected all they could really do was constantly change their HTML markup to thwart our plugin.

Great Responsibility

In addition to letting us show news ratings whenever an article’s URL existed in our database, the plugin gave us unprecedented access to the composition of every user’s Facebook feed, and allowed us to gain insights that only Facebook could see. Are you freaking out about privacy right now? We were too, and the Cambridge Analytica data scandal was still a year away from going public.

We had legitimate reasons for collecting this data: we wanted to provide an effective tool that understood the news and social media landscape beyond what our users took the time to submit. It would also give us access to time and geographic data that would show how fake news spread across the internet.

Data is Scary

If you didn’t already know this, a browser plugin you install can, with the permissions it requests, see your browsing activity in real time and transmit that data to a remote server. While this is scary, the data can be used for a multitude of legitimate and beneficial outcomes. We now had a huge responsibility on our shoulders.

If users were going to adopt our application, they would first and foremost need to trust us. We couldn’t sugarcoat it; we had to proactively disclose what data we were collecting and how we would use it. We also had to secure and randomize this data, including the user’s Facebook ID, which we used to prevent duplicate submissions from a single person.
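One common way to use an account ID for duplicate detection without storing it in the clear is a keyed hash. The sketch below is an illustration under that assumption, not the code we shipped: `SERVER_SECRET`, `pseudonymize`, and `SubmissionLog` are hypothetical names.

```python
import hashlib
import hmac

# Hypothetical server-side secret; in practice it would live in a secrets store.
SERVER_SECRET = b"replace-with-a-long-random-secret"


def pseudonymize(user_id: str, secret: bytes = SERVER_SECRET) -> str:
    """Return a keyed SHA-256 digest of the ID so the raw ID is never stored."""
    return hmac.new(secret, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


class SubmissionLog:
    """Remembers (pseudonym, article URL) pairs to reject duplicate ratings."""

    def __init__(self):
        self._seen = set()

    def record(self, user_id: str, article_url: str) -> bool:
        """Return True for a user's first rating of an article, False for repeats."""
        key = (pseudonymize(user_id), article_url)
        if key in self._seen:
            return False
        self._seen.add(key)
        return True
```

The same ID always maps to the same digest, so duplicates are still caught, but someone who only sees the stored digests cannot read the original IDs without the secret.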

Of course there were a number of other technical challenges, such as keeping the platform fast, and managing the massive amount of data we would receive, not to mention very bursty traffic patterns. You can read about the architecture of the application here.

How It Works

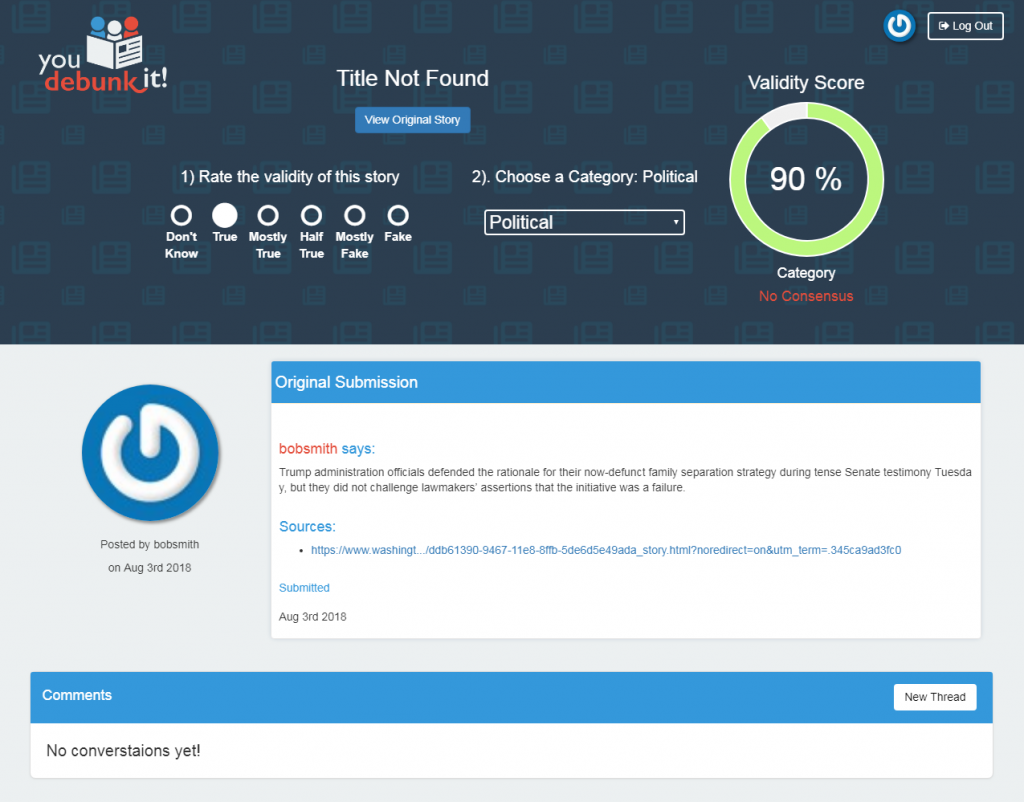

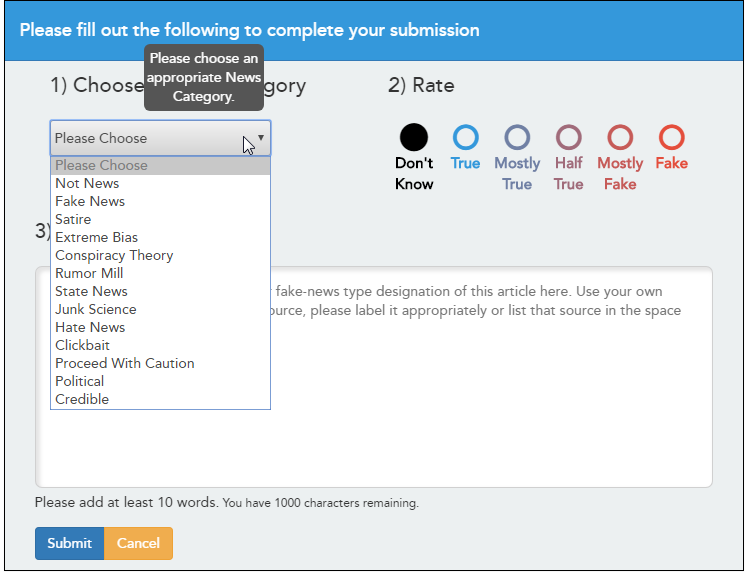

When an article was submitted to the system, the poster was required to provide a rating consisting of:

- A category (inspired by BSDetector)

- A 5-point truth score (inspired by PolitiFact)

- A short narrative supporting this rating with at least one valid source (inspired by Slashdot)

In order to avoid voting bias, the category and rating were hidden from the public until rating consensus was achieved by a quorum of users.
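To make the quorum rule concrete, here is a minimal sketch of how a rating could stay hidden until enough users agree. The thresholds (`QUORUM_SIZE`, `AGREEMENT_SHARE`), the category labels, and the function name are assumptions for illustration; the article does not specify the actual values.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional

QUORUM_SIZE = 5        # assumed minimum number of ratings before anything is shown
AGREEMENT_SHARE = 0.6  # assumed share of raters who must pick the same category


@dataclass
class Rating:
    category: str     # placeholder labels such as "satire" or "bias"
    truth_score: int  # 1 (false) to 5 (true)


def public_view(ratings: List[Rating]) -> Optional[Dict[str, object]]:
    """Return the public summary, or None while the rating must stay hidden."""
    if len(ratings) < QUORUM_SIZE:
        return None
    category, votes = Counter(r.category for r in ratings).most_common(1)[0]
    if votes / len(ratings) < AGREEMENT_SHARE:
        return None  # enough raters, but no consensus on the category yet
    mean_score = sum(r.truth_score for r in ratings) / len(ratings)
    return {"category": category, "truth_score": round(mean_score, 1)}
```

Returning `None` until both conditions hold means early raters never see each other's votes, which is the point of hiding the rating in the first place.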

When additional posters submitted a rating, they were required to agree or disagree with the original poster’s narrative and source (inspired by Stack Overflow). Optionally they could engage in discussion, and those comments could be up-voted or down-voted (inspired by Slashdot, Reddit, and Quora).

Users would be assigned a hidden karma score based on their engagement on the site, taking into account all of their interactions. This would serve as a weight in how their ratings would be interpreted and trusted. Karma was a way not only to combat trolls and bots, but also to deal with reasonable people who might have been “triggered” when posting on the site.
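As a rough illustration of karma acting as a weight, the sketch below computes a karma-weighted average truth score. The mapping from karma to weight (clamped to a 0 to 1 range with an assumed cap of 100) and both function names are hypothetical; the real formula is not described here.

```python
from typing import List, Tuple

MAX_KARMA = 100.0  # assumed cap; karma above this earns no extra weight


def karma_to_weight(karma: float) -> float:
    """Map a karma score to a weight between 0.0 and 1.0."""
    return max(0.0, min(karma, MAX_KARMA)) / MAX_KARMA


def weighted_truth_score(ratings: List[Tuple[int, float]]) -> float:
    """Average truth scores (1 to 5), weighting each one by its rater's karma."""
    weights = [karma_to_weight(karma) for _, karma in ratings]
    total = sum(weights)
    if total == 0:
        raise ValueError("no ratings from users with positive karma")
    return sum(score * w for (score, _), w in zip(ratings, weights)) / total
```

With two established users scoring an article 2 and one low-karma account scoring it 5, `weighted_truth_score([(5, 10), (2, 100), (2, 100)])` lands near 2.1 instead of the unweighted mean of 3.0, so a single outlier moves the result far less.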

Another design consideration was that we didn’t know what we didn’t know. Over the lifetime of the application, our algorithm would undoubtedly change. Because we needed our system to provide consistency across all article ratings, we would preserve and protect all original user-submitted data, and retroactively recalculate ratings when we changed our algorithm.
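The idea of keeping raw submissions untouched and treating published ratings as derived data can be sketched as follows. The two scoring functions are stand-ins chosen for illustration (a mean and a median), not our actual algorithms, and `recalculate` is a hypothetical helper.

```python
from statistics import mean, median
from typing import Callable, Dict, List

Algorithm = Callable[[List[int]], float]


def recalculate(raw_scores: Dict[str, List[int]], algorithm: Algorithm) -> Dict[str, float]:
    """Derive a published rating per article URL without modifying the raw scores."""
    return {url: float(algorithm(scores)) for url, scores in raw_scores.items()}


# Raw truth scores exactly as users submitted them (made-up example data).
raw = {"https://example.com/article": [1, 1, 5]}

ratings_v1 = recalculate(raw, mean)    # first version of the algorithm
ratings_v2 = recalculate(raw, median)  # a later version, applied retroactively
```

Because every published rating is recomputed from the same stored submissions, switching algorithms changes all articles consistently and nothing users originally entered is lost.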

What happened to You Debunk It?

We were inspired to create a tool that contributed to the common good. We invested tens of thousands of dollars into it, in the form of time that would otherwise have been spent on client projects. Because there was really no viable business model behind the application, it became a social experiment and a mock software project for some of our junior employees.

We looked at the possibility of funding from the usual suspects who support sites like Snopes and PolitiFact, but this project was basically going to require a full-time team to build and maintain. We just didn’t see a funding model that would support this for the long term.

Why was our Fake News app a horrible idea? As we approach the 2020 election, we’ve learned a lot about our society in the last four years. There is an abundance of information available to support any type of bias, and this has resulted in endless arguments on social media, each party believing they are correct.

We built this tool so people had a chance to think before they shared fake news in a headline-triggered world. What we’ve learned is that the people who need to fact-check the news won’t.

The unfortunate reality is that we live in a society in which many people simply don’t care about the truth; they only care about supporting their own bias. Can we fix this with technology?

Chris Miller

Founder, Cloud Brigade

You Debunk It is Dedicated to Greg Bettinger